Script / Documentation

Welcome to Just Facts Academy, where you learn how to research like a genius.

Remember, you don’t have to be an Einstein to be a great researcher. You simply need to put in the effort and apply the 7 Standards of Credibility that we share in this video series.

Today’s lesson: Raw Data.

Let’s define raw data, explain why it’s essential by using a real-world example, and show you how to use this standard in your own research.

Raw data simply means the actual numbers, procedures, and formulas used—the original information before descriptions are given and conclusions are drawn.

Why is this so important?

Because people are people. They make mistakes, jump to false conclusions, and aren’t always honest. By examining the raw data, you can lessen the chances that you’ll get fooled by misinformation and then spread it.

This is about more than just a grade. Misinformation can harm and even kill people. That’s why books about academic integrity emphasize that researchers should be completely transparent with their sources, facts, data, and methods so that other people can readily check their results.[1] [2] [3] [4]

Real transparency requires raw data.

Here’s an example of this in action: Back in 2010, a scholarly journal called the American Economic Review published a groundbreaking study based on more than 2,000 data points. It found that nations with large national debts had poor economic growth.[5] This is important because poor economic growth goes hand-in-hand with hunger, homelessness, poverty, short lifespans, and low levels of education.[6]

Three years later, however, researchers from the Political Economy Research Institute found a significant error in the study. They were able to do this because the authors of the original study made the raw data publicly available.[7] [8]

Mistake fixed. Problem solved, right? Not even close!

You see, the researchers who discovered the error ran new calculations but then buried their results and distorted them.

Stick with me here, because this is shocking and provides a great lesson.

In the opening paragraph of their study, these scholars claimed that economic growth “is not dramatically different” whether national debts are low or high.[9]

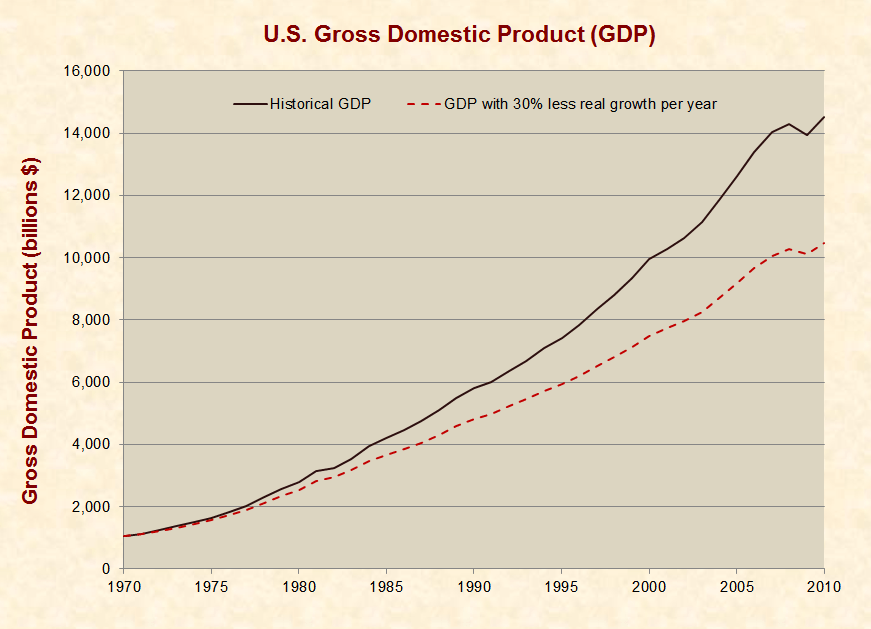

Wait a second. What do they mean by “not dramatically different”? Well, one has to dig 10 pages into their study to find the actual data. And guess what it shows? The same basic result as the original study: Economic growth in nations with high levels of debt averages about 30% below that of nations with moderate levels of debt, and nearly 50% below that of nations with low levels of debt.[10] [11] [12]

Yet, instead of examining the raw data for this study, media outlets widely parroted the authors’ misleading summary.[13] The end result? Citizens and policymakers were fed a blatant falsehood about an issue with life-or-death implications.[14]

And due to the compounding effect of growth rates, this can cause real damage over time.[15]
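
To see why a gap in growth rates matters so much over time, here is a minimal Python sketch. The two growth rates are hypothetical values chosen only so that one is 30% below the other; they are not figures from either study, and the helper names `relative_gap` and `compound` are made up for this illustration.

```python
def relative_gap(rate, baseline):
    """Fraction by which `rate` falls below `baseline` (0.30 means 30% below)."""
    return (baseline - rate) / baseline


def compound(rate, years, start=100.0):
    """Size of an economy after growing at `rate` per year for `years` years."""
    return start * (1 + rate) ** years


# Hypothetical annual growth rates, chosen only for illustration.
moderate_debt_growth = 0.030
high_debt_growth = 0.021

gap = relative_gap(high_debt_growth, moderate_debt_growth)
print(f"Growth rate gap: {gap:.0%} below")

# Both economies start at 100; compare them after several time spans.
for years in (10, 30, 50):
    faster = compound(moderate_debt_growth, years)
    slower = compound(high_debt_growth, years)
    shortfall = 1 - slower / faster
    print(f"After {years} years: {faster:.0f} vs {slower:.0f} ({shortfall:.0%} smaller)")
```

Run it and watch the shortfall widen as the number of years increases. That widening is the compounding effect at work.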

Now that you see the importance of raw data, let’s talk about how you can apply it in your own research.

First, be very skeptical of anyone who does not publicly share their raw data or places it where few people will see it. No matter how impressive someone’s academic credentials may be, they are not above making mistakes or being deceitful.

Even the peer-review process, which is called the “gold standard” of academic integrity, has produced thousands of papers that have been retracted due to errors and deliberate fraud.[16] [17] [18] [19]

Bottom line: When people don’t readily share their raw data, it’s a big red flag.

Even if you don’t have the math skills to check their work, other people do. And when raw data is available, everyone can check everyone else’s results. This is how good science works.

Second, don’t settle for subjective descriptions like “not dramatically different.” When you read something like that, your first thought should be, “What are the actual numbers?” Your next questions should be, “Are there margins of error on those numbers?” and, if so, “What are they?”
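
If you have the raw numbers, you can estimate a margin of error yourself. The sketch below shows one common approach, assuming a normal approximation with a multiplier of 1.96 for roughly 95% confidence. The two groups of values are invented for illustration and are not real data, and `mean_with_margin` is a hypothetical helper name.

```python
import math
import statistics


def mean_with_margin(values, z=1.96):
    """Return (mean, margin of error) using a normal approximation."""
    mean = statistics.mean(values)
    # Standard error: sample standard deviation divided by sqrt(sample size).
    standard_error = statistics.stdev(values) / math.sqrt(len(values))
    return mean, z * standard_error


# Hypothetical growth rates (in percent) for two groups; not real data.
group_a = [3.5, 2.8, 4.1, 3.0, 3.6, 2.9]
group_b = [2.4, 3.3, 1.9, 2.7, 3.1, 2.2]

for name, values in (("A", group_a), ("B", group_b)):
    mean, margin = mean_with_margin(values)
    print(f"Group {name}: {mean:.2f} ± {margin:.2f}")
```

With samples this small, the normal approximation tends to understate the uncertainty, so a careful analysis would likely use a t-distribution instead. The point is simply that an average with no margin of error tells you only part of the story.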

Third, take the time and make the effort to find the raw data and vet it. Raw data consists not just of numbers but also of statements and events. Journalists are infamous for quoting people out of context, and scholars often twist statistics, so don’t blindly trust them.

Instead, read full transcripts, watch the entirety of uncut videos, read full studies, and scrutinize the data before you come to a firm conclusion.

What if you don’t have the time to do that? No one can force you to have an opinion, so remain agnostic and fight the common tendency to uncritically accept anything that aligns with your current views.

The great scientist Louis Pasteur displayed keen insight into human nature when he wrote that “the greatest aberration of the mind is to believe a thing to be, because we desire it.”[20]

Take note—none of these 3 steps requires a high IQ or a college degree. They only require some work and intellectual honesty.

So apply the Raw Data standard and the rest of Just Facts’ Standards of Credibility, so you can research like a genius.